What is tail latency?

Tail latency is the high-percentile latency of your application, essentially the P99 or P99.9 latency. The P99 latency is the response time within which 99% of your requests complete; the slowest 1% take longer than that. P99.9 is the same measure for 99.9% of your requests.
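As a minimal sketch of how a percentile such as P99 can be computed from raw measurements, the snippet below uses the nearest-rank definition (other definitions interpolate between samples, so monitoring tools may report slightly different values). The `percentile` helper and the latency numbers are made up for illustration.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical latencies in milliseconds: 98 fast requests and 2 slow ones.
latencies_ms = [10] * 98 + [250, 900]

p50 = percentile(latencies_ms, 50)
p99 = percentile(latencies_ms, 99)
p999 = percentile(latencies_ms, 99.9)
print(f"P50={p50} ms, P99={p99} ms, P99.9={p999} ms")
```

The median here looks perfectly healthy, while the P99 and P99.9 values expose the two slow requests that an average or median would hide.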

Why does it matter?

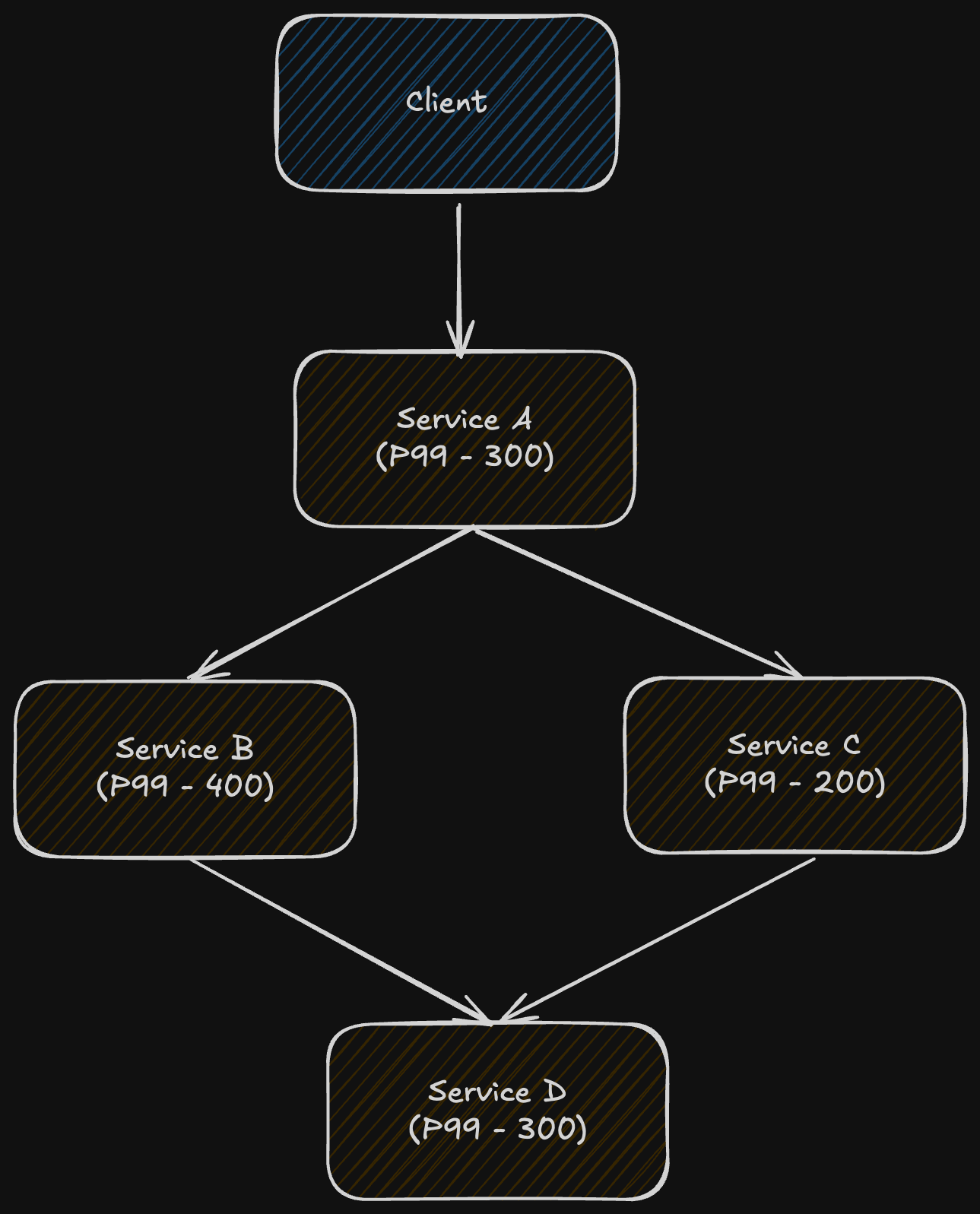

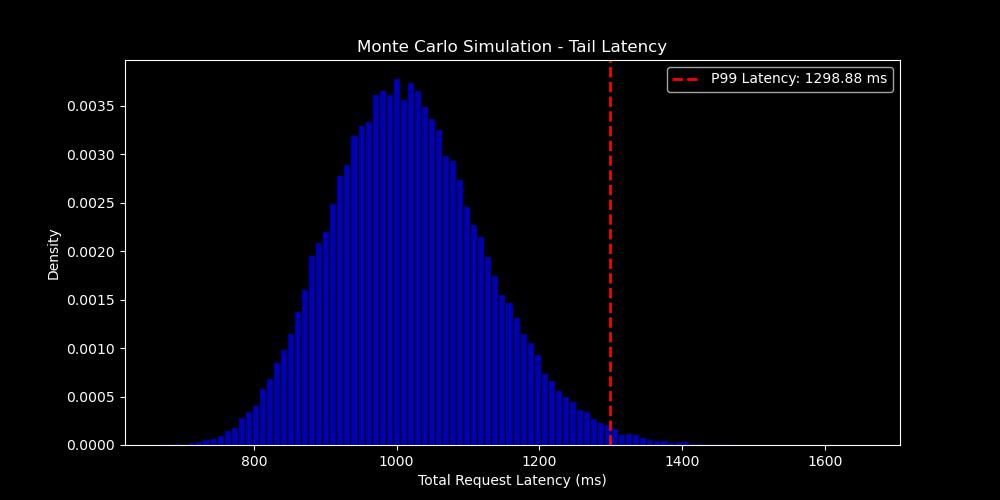

You might wonder, "why should I care about improving the latency of 1% or 0.1% of my requests?" It matters because, when you serve a large volume of customer traffic, even a small percentage is a large absolute number of customers with a bad experience: at one million requests a day, for example, 1% is 10,000 slow requests. In a distributed system or a micro-service architecture, tail latencies can also compound across the different services, so a larger share of customers experiences significant delays in responses. The accompanying graph displays the P99 latency after running a Monte-Carlo simulation across the P99 latencies in a micro-service architecture.
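The compounding effect can be sketched with a small Monte-Carlo simulation. This is not the simulation behind the graph; it is a simplified model that assumes every request calls each of `num_services` services, that the services are independent, and that each call has a 1% chance of landing in that service's tail. All names and parameters are illustrative.

```python
import random

def fraction_hitting_tail(num_services, tail_probability=0.01,
                          trials=100_000, seed=42):
    """Estimate the fraction of requests that see at least one
    tail-latency response when calling num_services services."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    slow = 0
    for _ in range(trials):
        # A request is slow if any one of its downstream calls is slow.
        if any(rng.random() < tail_probability for _ in range(num_services)):
            slow += 1
    return slow / trials

def analytic_fraction(num_services, tail_probability=0.01):
    """Closed form under the same independence assumption."""
    return 1 - (1 - tail_probability) ** num_services

results = {n: fraction_hitting_tail(n) for n in (1, 5, 10)}
for n, simulated in results.items():
    print(f"{n:>2} services: simulated {simulated:.3f}, "
          f"analytic {analytic_fraction(n):.3f}")
```

Under these assumptions, a request that touches 10 services hits at least one P99-or-worse response roughly 9.6% of the time (1 - 0.99^10), so what was a 1-in-100 event for a single service becomes close to a 1-in-10 event for the user.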

What causes high tail latency?

High tail latency can have multiple causes, including garbage collection, network issues, resource failures, and resource contention. For this blog, let's mainly explore how garbage collection and resource contention affect tail latency.

Garbage collection

Garbage collection (GC) is the process of freeing memory that the program no longer needs. How it is done depends on the language runtime. Java and JavaScript (V8, and therefore Node) use generational garbage collectors, in which objects in the heap are promoted to an older generation when they survive a collection cycle. Go uses a concurrent mark-and-sweep collector that is not generational. In Go and Node, most of the collection work runs concurrently with your application code. In Java, the behavior depends on the collector you run: the Serial and Parallel collectors pause all application threads for the duration of a collection, G1 pauses them while it evacuates live objects, and ZGC and Shenandoah do most of their work concurrently. These pauses are referred to as Stop-The-World pauses. The pauses that garbage collection induces in the application threads can affect your latency metrics in ways that are hard to do much about, since we have little control over when GC runs in these languages. Note: GC in JavaScript and Go also pauses application threads, but those collectors are optimized so that the pauses are short.
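Each of the runtimes above has its own GC logging. As a language-neutral illustration of measuring collector pauses, the sketch below uses CPython, which is not one of the runtimes discussed here but exposes a simple hook: functions in `gc.callbacks` are invoked at the start and stop of every run of its cyclic collector, during which the Python code that triggered it does not make progress. The `Node` class and the amount of garbage are arbitrary.

```python
import gc
import time

pause_durations = []  # seconds spent inside each collection
_started_at = None

def record_gc_pause(phase, info):
    """gc callback: time the interval between 'start' and 'stop'."""
    global _started_at
    if phase == "start":
        _started_at = time.perf_counter()
    elif phase == "stop" and _started_at is not None:
        pause_durations.append(time.perf_counter() - _started_at)
        _started_at = None

gc.callbacks.append(record_gc_pause)

class Node:
    def __init__(self):
        self.other = None

def make_cyclic_garbage(count):
    for _ in range(count):
        a, b = Node(), Node()
        # Reference cycle: reference counting alone cannot free these,
        # so they are left for the cyclic collector.
        a.other, b.other = b, a

make_cyclic_garbage(50_000)
gc.collect()  # force a full collection so at least one pause is recorded
gc.callbacks.remove(record_gc_pause)

worst_pause_ms = max(pause_durations) * 1000
print(f"{len(pause_durations)} collections, worst pause {worst_pause_ms:.3f} ms")
```

The worst pause, rather than the average, is the number that matters here, because it adds directly to the latency of whichever request happened to be in flight when the collection ran.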

In the Rust programming language, memory management is decided at compile time: the ownership rules let the compiler insert the code that frees a value at the point where it goes out of scope, so there is no garbage collector running alongside your application, and the Rust compiler compiles straight to machine code. This makes Rust a great choice for applications that are sensitive to tail latency, as memory management becomes predictable (as long as you are writing safe Rust).

Resource contention

CPU resource contention usually occurs when a large number of requests arrive at your server, so that requests queue up before the CPU can process them. Note that GC can also contend with the application threads for CPU, since the runtime schedules it periodically to free up memory. To avoid requests backing up, you can create more threads, which helps when cores are idle or threads are blocked on I/O. However, this comes at the cost of higher memory usage, especially if your runtime hands off thread creation and management to the OS (e.g. the JVM).
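To make the backing up of requests concrete, here is a minimal sketch of a single worker serving a queue of requests. It assumes randomly spaced arrivals and exponentially distributed service times; the rates are hypothetical and chosen only to contrast a lightly loaded server with one near saturation.

```python
import random

def simulate_p99_latency(arrival_rate, service_rate,
                         num_requests=50_000, seed=7):
    """Simulate one worker processing requests in arrival order and
    return the P99 of (queueing + service) time in seconds."""
    rng = random.Random(seed)
    clock = 0.0           # arrival time of the current request
    server_free_at = 0.0  # when the worker finishes its current request
    latencies = []
    for _ in range(num_requests):
        clock += rng.expovariate(arrival_rate)   # next request arrives
        start = max(clock, server_free_at)       # wait if the worker is busy
        server_free_at = start + rng.expovariate(service_rate)
        latencies.append(server_free_at - clock)
    latencies.sort()
    rank = (99 * len(latencies) + 99) // 100     # ceil(0.99 * n), 1-based
    return latencies[rank - 1]

# Hypothetical worker that can handle 100 requests per second on average.
p99_low_load = simulate_p99_latency(arrival_rate=50, service_rate=100)
p99_high_load = simulate_p99_latency(arrival_rate=95, service_rate=100)
print(f"P99 at 50% load: {p99_low_load * 1000:.0f} ms")
print(f"P99 at 95% load: {p99_high_load * 1000:.0f} ms")
```

The average service time is identical in both runs; only the arrival rate changes. The tail grows sharply as the arrival rate approaches what the worker can handle, because each slow request delays everything queued behind it.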

Contention can also happen in other parts of your application code. For example, if your application performs a lot of logging or file reads and writes to disk, buffers can fill up and slow down your application's response time. Disk I/O is significantly slower than memory I/O, so it is important to do it asynchronously (if possible) and as little as possible.
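One way to keep disk writes off the request path is to hand log records to a background thread. The sketch below uses Python's standard `logging.handlers.QueueHandler` and `QueueListener`; the logger name, log message, and temporary file location are placeholders.

```python
import logging
import logging.handlers
import os
import queue
import tempfile

# Hypothetical log location; a real service would use its own path.
log_path = os.path.join(tempfile.mkdtemp(), "app.log")

# Request threads only put records on this in-memory queue.
log_queue = queue.Queue(-1)  # -1 means unbounded

# The listener's background thread performs the actual disk writes.
file_handler = logging.FileHandler(log_path)
listener = logging.handlers.QueueListener(log_queue, file_handler)
listener.start()

logger = logging.getLogger("request_path")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(logging.handlers.QueueHandler(log_queue))
logger.propagate = False  # avoid duplicate synchronous output via the root logger

# On the request path this call returns after enqueueing the record.
logger.info("handled request in %d ms", 12)

# On shutdown, stop() drains the queue before returning.
listener.stop()
file_handler.close()
```

An unbounded queue trades disk stalls for memory growth if the disk cannot keep up; a bounded queue that drops or samples records is another option when log volume is high.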

Conclusion

Optimizing the tail latency of your application is important, as it forces you to understand how your program manages memory and to profile your application to find the other I/O bottlenecks that can be slowing it down.